The Curious Case of the Driverless U-Turn: When Police Pulled Over a Waymo Robotaxi in San Bruno

In the early hours of a routine DUI enforcement operation in San Bruno, California, officers from the local police department spotted something unusual: a sleek Jaguar I-Pace cruising down the road, executing what appeared to be an illegal U-turn right in front of them. It was September 29, 2025, and as the police activated their lights and sirens, the vehicle obediently pulled over to the side of the road. But when the officers approached, they found no driver behind the wheel: no one to question, no license to check, and certainly no sobriety test to administer. This wasn’t a ghost car; it was a Waymo robotaxi, one of the autonomous vehicles revolutionizing urban transportation. The incident, which quickly made headlines, underscored the growing pains of integrating self-driving technology into everyday life, where human laws meet machine logic.

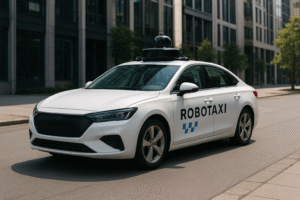

Robotaxis, the driverless shuttles operated by companies like Waymo (a subsidiary of Alphabet Inc.), Cruise, and Tesla, represent the pinnacle of autonomous vehicle technology. These cars rely on a sophisticated array of sensors, cameras, lidar, radar, and artificial intelligence to navigate city streets without human intervention. Waymo, in particular, has been a pioneer, launching its first fully driverless rides in Phoenix, Arizona, back in 2020 and expanding to cities like San Francisco and Los Angeles. By 2025, Waymo’s fleet had grown to thousands of vehicles, offering rides via apps similar to Uber or Lyft, but with no human operator. The promise is clear: safer roads, reduced traffic congestion, and accessibility for those who can’t drive. According to industry reports, autonomous vehicles could prevent up to 90% of accidents caused by human error, such as distracted driving or impairment. Yet, as this San Bruno stop illustrates, the technology isn’t flawless, and neither is the legal framework surrounding it.

The details of the September 29 incident paint a picture of a minor traffic violation escalating into a regulatory puzzle. Around midnight, during a heightened DUI patrol aimed at curbing impaired driving, San Bruno police noticed the Waymo vehicle, a modified Jaguar electric SUV, making an unauthorized U-turn on a busy thoroughfare. Such maneuvers are prohibited in many areas to prevent accidents, especially at night when visibility is low. The robotaxi, programmed to follow traffic laws, apparently misjudged the situation, perhaps due to an algorithmic glitch or a misinterpretation of road markings. Officers initiated a stop, and the vehicle complied seamlessly, pulling over safely without any erratic behavior. Body camera footage, later shared by the department, showed officers approaching the empty car, peering inside, and realizing they were dealing with an autonomous entity.

What followed was a moment of confusion that highlighted a key challenge: how do you ticket a robot? The San Bruno Police Department explained in a statement that while the violation was clear, issuing a citation proved impossible under current laws. “Our citation books don’t have a box for ‘robot,’” quipped one officer in a social media post that went viral.

Instead of fining the non-existent driver, the police contacted Waymo’s remote support team, who monitor the fleet from a control center. Waymo representatives confirmed the vehicle’s autonomy and promised to review the incident internally. No ticket was issued, and the robotaxi was allowed to continue its journey after a brief delay. The event lasted less than 10 minutes, but it sparked widespread discussion on platforms like X (formerly Twitter), where users debated everything from AI accountability to the future of policing.

This wasn’t the first time a robotaxi had tangled with law enforcement, but it stands as the most recent documented case as of October 15, 2025. Earlier in the year, in July 2025, a Waymo vehicle in Los Angeles was pulled over for an illegal left turn directly in front of a police cruiser.

Similar to the San Bruno stop, officers approached an empty car and had to liaise with the company rather than a human driver. These incidents echo broader challenges faced by the industry. In 2023, a Cruise robotaxi in San Francisco dragged a pedestrian who had first been struck by a hit-and-run human driver and thrown into its path, leading to a suspension of the company's driverless operations. Tesla’s Robotaxi program, launched in June 2025 in Austin, Texas, has also seen its share of scrutiny, with videos circulating of vehicles phantom braking near stationary police cars or slowing for arrests in progress.

The legal implications are profound. In most U.S. jurisdictions, traffic laws are written with human drivers in mind. California’s Vehicle Code, for instance, requires a “driver” to be responsible for violations, but defining that in an autonomous context is tricky. Waymo and similar companies argue that they, as operators, should bear responsibility, much like a taxi company would for its fleet. However, critics point out loopholes: without a human to penalize on the spot, enforcement becomes bureaucratic, involving corporate fines or software updates rather than immediate deterrence. The National Highway Traffic Safety Administration (NHTSA) has been investigating such cases, pushing for updated regulations that could mandate remote ticketing systems or AI that better recognizes emergency vehicles.

In the San Bruno case, no injuries occurred, but it raised questions about accountability: if a robotaxi causes harm, who pays? Insurance models are evolving, with companies like Waymo self-insuring their fleets, but public trust hinges on transparency.

From a technological standpoint, these pull-overs reveal the strengths and limitations of AI driving. Robotaxis excel at following rules consistently; they don’t get tired, drunk, or distracted. Waymo’s system, for example, uses neural networks trained on millions of miles of data to predict road behaviors. Yet edge cases, like ambiguous U-turn zones or unexpected police interactions, can trip them up. In response to the incident, Waymo stated they were “reviewing the event to improve our mapping and navigation algorithms.”

Training programs for first responders are also ramping up. In Las Vegas, police have been educated on handling Zoox robotaxis, including how to override them in emergencies.

Tesla, meanwhile, has incorporated features in its Full Self-Driving (FSD) software to yield for emergency vehicles, as seen in updates rolled out in October 2025.

Looking ahead, the San Bruno stop could accelerate changes in how society integrates autonomous tech. By 2030, analysts predict robotaxis could dominate urban mobility, potentially reducing car ownership and emissions. But for that to happen, laws must catch up. Proposals include digital citations sent directly to company servers or standardized protocols for police-robotaxi interactions, such as voice commands or app-based overrides. Some cities, like Austin, have already conducted joint training sessions with Tesla to prepare officers for the Robotaxi launch.

Internationally, countries like Australia, where strict traffic fine systems rely on human accountability, face additional hurdles that could delay adoption.

In the end, the driverless U-turn in San Bruno was more comedy than crisis—a robot caught in a human world. No one was hurt, and the vehicle resumed service shortly after. Yet, it serves as a reminder that as robotaxis proliferate, so too must our understanding of shared roads. The next time a cop pulls over a self-driving car, it might not be so novel; it could be the norm in a future where machines drive us forward, one careful stop at a time. As one X user humorously pondered, “If a robotaxi evades police, is it resisting without violence?”

The answer, like the technology itself, is still evolving.
